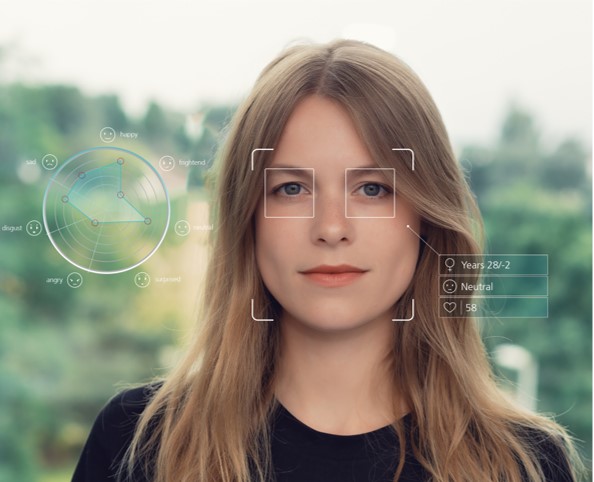

Facial expressions often convey more than words. They are a manifestation of our emotions, both conscious and unconscious, and can either complement or contradict what we are saying verbally. In situations where it’s important to detect these unconscious or contradictory emotional expressions, such as in market research or UX design, facial analysis software can provide valuable insights. In this interview, we talk with Dominik Seuß, Group Manager of Facial Analysis Solutions at Fraunhofer IIS, to learn more about his approach to real-time face tracking technologies and where facial detection and emotion analysis are used in real-life applications.

Dominik, can you highlight some of the topics that you and your group Facial Analysis Solutions are working on?

Dominik Seuß: Our primary focus is professional software development. That means that we develop software solutions and seamlessly integrate them into our customers’ ecosystems. In terms of the Technology Readiness Level (TRL) scale, our software ranks at the highest level, TRL 9, meaning our customers are actively using our product. Our flagship product is the SHORE® software library, designed for real-time face detection, facial analysis, and the detection of psycho-physiological states.

SHORE® celebrated its 15th anniversary in 2022, and facial detection and emotion analysis in general have been prominent research areas at Fraunhofer IIS for quite some time. How did facial detection and emotion analysis technologies and your team’s approach evolve over time?

It all started with the idea of detecting a single face within an image and later expanded to the detection of any number of faces. This was further enhanced by incorporating emotion recognition based on five fundamental emotions. In a next step, more emotions were included in the analysis, followed by age and gender estimation. In recent years, the focus has increasingly shifted towards detecting complex psycho-physiological states, meaning we moved beyond discrete emotions and started classifying valence and arousal. Here, our objective is to extract maximum information from the camera image, including the integration of biosignals such as respiratory rate, heart rate, and heart rate variability.

During the development of SHORE®, we prioritized two key principles from the start: firstly, it must work in real time, and secondly, it must not require any additional hardware, so standard equipment like a €20 webcam is sufficient.

What are some of the real-world applications for a facial analysis software like SHORE®?

There is actually a wide range of applications. We’ve collaborated with a camera manufacturer, for instance, to develop face detection and tracking in video streams. This functionality enables the camera to automatically focus on the detected face.

Recently, there has been a significant focus on solutions for the automotive industry. One notable development here is the mandate for driver monitoring systems in new vehicles from 2025 onward, often implemented through camera systems. This led us to the question: What other benefits can we get from cameras beyond driver monitoring? One potential avenue is digital health. Vehicles offer a controlled environment where individuals spend substantial amounts of time, which makes it possible to compare biosignal data over months and to identify long-term changes. Consequently, original equipment manufacturers (OEMs) and suppliers have recognized health and well-being as a crucial area of interest and are actively exploring ways to integrate these aspects into vehicles.

Our software also finds application in product testing, specifically within the gaming industry. Traditionally, electroencephalogram (EEG) caps have been used to measure valence and arousal levels during user testing. However, this requires participants to wear the EEG cap at home, which can be error-prone and time-consuming for them. With SHORE®, we can measure valence and arousal purely through camera-based methods using a standard webcam, which is more comfortable for the participants and streamlines the testing process.

Aside from face detection and analysis, we also cover the complete spectrum of data collection for AI training, the AI training process itself, and the seamless integration of the final software into consumer products. One example is an electric toothbrush and its corresponding app, where we developed an AI to track the movement and position of the toothbrush. At first glance, this doesn’t seem to be directly related to facial detection and analysis, but the underlying development processes are actually similar.

When it comes to facial analysis software, data protection is a crucial concern. What is your team’s perspective on this matter, and how do you ensure that your software solutions comply with data protection requirements?

There are two important aspects to consider here. Firstly, we prioritize the technical requirements for implementing data protection measures. For instance, in the case of SHORE®, we ensure that no video material is stored externally. Instead, SHORE® performs a real-time analysis of images or video streams. We do not have any functionality for recognition, which means we do not extract biometric features, conduct fingerprinting, or store personal data. Only metadata, such as age, gender, and emotional state, are processed and utilized by SHORE®.

The second aspect revolves around transparency and building trust with our clients. Recognizing the sensitivity of combining faces and cameras, we are committed to maintaining transparency and meeting stringent data protection standards. Our offer also includes supporting our customers in obtaining an independent data protection certification for SHORE® products.

Your team recently launched a new version of SHORE®, which incorporates WebAssembly as an essential component. Can you please elaborate on how WebAssembly works and its role in the overall data protection framework? Additionally, how does this feature enhance the user experience of SHORE®?

In the past, it was common to participate in market research or subject studies from the comfort of one’s own home. During surveys, the participants would be recorded, and the video footage would be sent to the market research company’s server for storage and analysis. With our latest software release, SHORE® now operates directly within the browser. When users open their browser, the camera feed is still analyzed, but here’s the unique feature: It is processed locally on their own computer, tablet, or mobile device. This means that the camera feed never leaves the user’s device and is analyzed in real time using the participant’s own hardware. Only the results are transmitted to the server, if desired.

To ensure that SHORE® runs efficiently on the user’s hardware, we have implemented it using WebAssembly. This solution offers the added benefit of running SHORE® natively in the browser without the need for any additional plugins. This enables market research clients, for example, to seamlessly integrate SHORE® into their existing survey tools with just a few lines of code. As a result, they can conduct privacy-compliant analyses, even in remote settings, while also streamlining their testing process.
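Editorial note: the idea described above, analyzing frames on the participant's device and transmitting only the results, can be illustrated with a minimal TypeScript sketch. It does not show the SHORE® API, which is not described in this interview. The `Analyzer` type, the `FrameResult` fields, and the `stubAnalyzer` function are hypothetical placeholders for a WebAssembly-backed analysis module, and the value ranges are assumptions.

```typescript
// Hypothetical per-frame result; field names and ranges are assumptions.
interface FrameResult {
  timestampMs: number;
  valence: number; // assumed range -1..1
  arousal: number; // assumed range -1..1
}

// One camera frame as raw RGBA pixels, e.g. as read from a canvas in a browser.
interface Frame {
  pixels: Uint8ClampedArray;
  width: number;
  height: number;
  timestampMs: number;
}

// Hypothetical analyzer signature standing in for a WebAssembly module.
type Analyzer = (
  pixels: Uint8ClampedArray,
  width: number,
  height: number
) => { valence: number; arousal: number };

// Placeholder analyzer: returns neutral values and performs no real analysis.
const stubAnalyzer: Analyzer = () => ({ valence: 0, arousal: 0 });

// Run the analyzer on every frame locally; pixel data is read but never copied
// into the results.
function analyzeLocally(frames: Frame[], analyze: Analyzer): FrameResult[] {
  return frames.map((f) => {
    const r = analyze(f.pixels, f.width, f.height);
    return { timestampMs: f.timestampMs, valence: r.valence, arousal: r.arousal };
  });
}

// Build the only thing that would be sent to a server: a JSON string of metadata.
// Fields are picked explicitly so nothing else can leak into the payload.
function buildPayload(results: FrameResult[]): string {
  return JSON.stringify({
    results: results.map((r) => ({
      timestampMs: r.timestampMs,
      valence: r.valence,
      arousal: r.arousal,
    })),
  });
}

// Example: two tiny 1x1 frames are analyzed and reduced to metadata.
const demoFrames: Frame[] = [
  { pixels: new Uint8ClampedArray([10, 20, 30, 255]), width: 1, height: 1, timestampMs: 0 },
  { pixels: new Uint8ClampedArray([40, 50, 60, 255]), width: 1, height: 1, timestampMs: 33 },
];
const demoPayload = buildPayload(analyzeLocally(demoFrames, stubAnalyzer));
```

In a real browser integration, the frames would typically come from the camera via `navigator.mediaDevices.getUserMedia` and a canvas, and the resulting payload could be sent with `fetch` only if the participant has agreed to it. The point of the sketch is the data flow: raw pixels stay inside `analyzeLocally`, and only numeric results reach `buildPayload`.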

If you had a wish list: What application or feature would you like to implement in the future for SHORE®, and what motivates you to improve and expand it?

I am motivated by the fact that each release brings about new possibilities and innovative functionalities that enhance the diverse value propositions for our customers. Looking ahead, my goal is to advance the detection of more complex human states. This includes conditions like stress or cognitive overload, which cannot simply be inferred from facial expressions alone. Additional information is required. In the past, wearables were necessary to measure the relevant parameters like respiration, heart rate, or oxygen saturation. Now, we can accomplish this contact-free, using only the camera image. That is an exciting development, as it eliminates the need for various equipment and means a standard webcam is all that is needed. And we are only scratching the surface of the possibilities. There is still much more to explore and achieve.

Thank you, Dominik, for sharing all of these insights with us.

Image copyright: iStock/metamorworks
