A core component of every Adaptive Bitrate Streaming (ABR) player is the algorithm that decides on the optimal playback quality. There is a large body of research on ABR decision-making, but most of the proposed algorithms fall into one of the following categories:

- Buffer-based: Uses the current buffer level on the client side to decide which quality to download.

- Throughput-based: Uses the measured throughput of prior media segment downloads for decision-making.

- Hybrid: Combines both buffer level and throughput information to select the best quality.
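To make the three categories concrete, here is a minimal sketch with one toy rule per category. The bitrate ladder, the reservoir and cushion sizes, the safety factor, and the low-buffer threshold are all hypothetical illustration values, not settings of dash.js or any other player, and production algorithms are considerably more elaborate.

```python
from typing import Sequence

# Hypothetical bitrate ladder in kbit/s, sorted from lowest to highest quality.
LADDER: list[int] = [400, 1200, 2500, 5000]


def highest_fitting(ladder: Sequence[int], budget_kbps: float) -> int:
    """Index of the highest bitrate not exceeding the budget (0 if none fits)."""
    best = 0
    for index, bitrate in enumerate(ladder):
        if bitrate <= budget_kbps:
            best = index
    return best


def buffer_based(ladder: Sequence[int], buffer_s: float,
                 reservoir_s: float = 5.0, cushion_s: float = 20.0) -> int:
    """Lowest quality below the reservoir, highest above reservoir + cushion,
    and a linear mapping from buffer level to ladder index in between."""
    if buffer_s <= reservoir_s:
        return 0
    if buffer_s >= reservoir_s + cushion_s:
        return len(ladder) - 1
    fraction = (buffer_s - reservoir_s) / cushion_s
    return int(fraction * (len(ladder) - 1))


def throughput_based(ladder: Sequence[int], throughputs_kbps: Sequence[float],
                     safety: float = 0.9) -> int:
    """Highest bitrate below a safety margin of the harmonic mean of recent throughputs."""
    harmonic_mean = len(throughputs_kbps) / sum(1.0 / t for t in throughputs_kbps)
    return highest_fitting(ladder, safety * harmonic_mean)


def hybrid(ladder: Sequence[int], buffer_s: float, throughputs_kbps: Sequence[float],
           low_buffer_s: float = 10.0) -> int:
    """Follow the throughput estimate, but cap it by the buffer rule while the buffer is low."""
    choice = throughput_based(ladder, throughputs_kbps)
    if buffer_s < low_buffer_s:
        choice = min(choice, buffer_based(ladder, buffer_s))
    return choice


if __name__ == "__main__":
    recent = [3000.0, 2800.0, 3200.0]  # measured segment throughputs in kbit/s
    print(buffer_based(LADDER, 15.0))        # 1
    print(throughput_based(LADDER, recent))  # 2
    print(hybrid(LADDER, 6.0, recent))       # 0
```

The harmonic mean is used because it is dominated by the slowest downloads, which makes the throughput estimate conservative.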

Additional information, such as the width and height of the video element and the supported frame rate, can further improve the selection algorithm.
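As an illustration of how element size and frame rate can narrow the choice, the sketch below filters a set of representations before any ABR rule runs. The `Representation` type, the example ladder, and the rule "keep everything up to the smallest resolution that already fills the element" are assumptions for this example, not the behavior of a specific player.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Representation:
    id: str
    bandwidth_kbps: int
    width: int
    height: int
    framerate: float


def eligible(reps: Sequence[Representation], element_width: int, element_height: int,
             max_framerate: float, pixel_ratio: float = 1.0) -> list[Representation]:
    """Representations worth considering for a given (non-empty) set and video element.

    Drops representations above the supported frame rate, then drops resolutions larger
    than the smallest one that already fills the element (scaled by the device pixel
    ratio), since those would cost bandwidth without a visible gain.
    """
    target_w = element_width * pixel_ratio
    target_h = element_height * pixel_ratio
    candidates = sorted((r for r in reps if r.framerate <= max_framerate),
                        key=lambda r: (r.width * r.height, r.bandwidth_kbps))
    if not candidates:
        # Nothing satisfies the frame-rate limit: fall back to the cheapest representation.
        return [min(reps, key=lambda r: r.bandwidth_kbps)]
    covering = [r for r in candidates if r.width >= target_w and r.height >= target_h]
    if not covering:
        return candidates  # the element is larger than every representation
    cap = min(r.width * r.height for r in covering)
    return [r for r in candidates if r.width * r.height <= cap]


REPS = [
    Representation("360p", 800, 640, 360, 30),
    Representation("720p", 2500, 1280, 720, 30),
    Representation("1080p", 5000, 1920, 1080, 30),
    Representation("1080p60", 7000, 1920, 1080, 60),
]

if __name__ == "__main__":
    print([r.id for r in eligible(REPS, 1280, 720, 30)])  # ['360p', '720p']
```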

The various ABR configuration options of media players are often challenging for content providers, who have to deal with questions like:

- What are the best settings for the majority of my users?

- Are all the qualities that I am encoding really required? Are some players skipping some of my representations/renditions?

- How can I test new settings or configurations and compare them to the default ones?

- How can I compare the performance of different media players with each other?

FAMIUM ABR Testbed

Ideally, all of the aforementioned questions can be answered through automated tests. For that reason, we implemented the FAMIUM ABR Testbed.

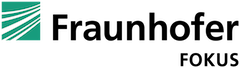

The architecture of the FAMIUM ABR Testbed is depicted below:

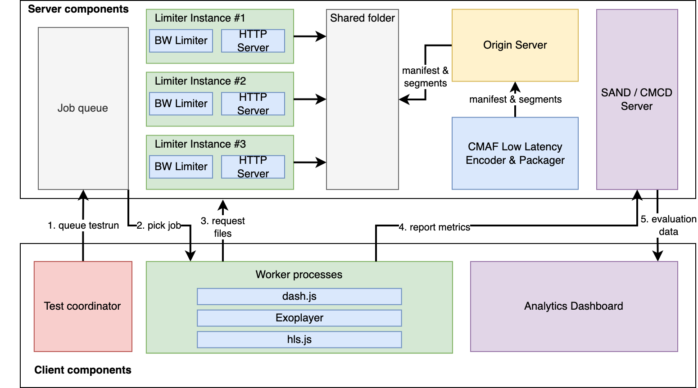

Step 1: Test Configuration

The entry point is a dedicated web page, the “Test coordinator”. It enables the selection of the desired media stream as well as the configuration of all ABR-related settings of the media player (e.g., dash.js, hls.js, ExoPlayer). An example of the test coordinator is shown in the screenshot below:

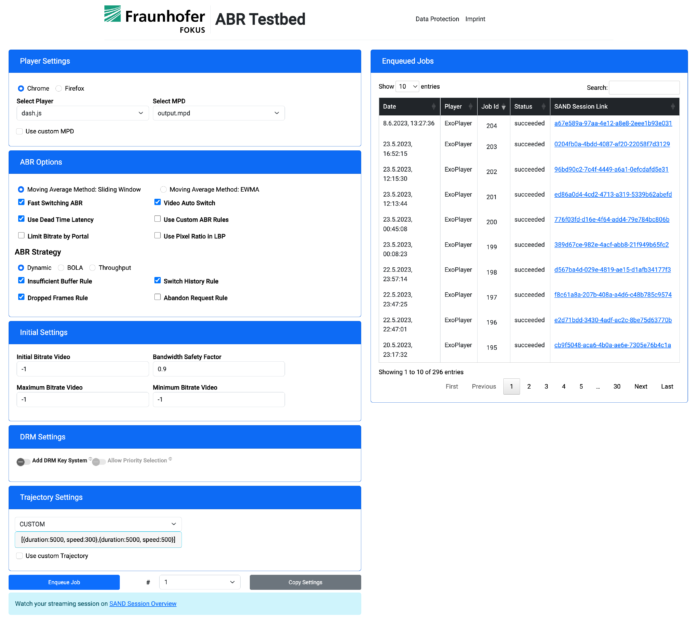
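The job format produced by the test coordinator is not described here, so the following is only a sketch of what one test run description might contain: the player, the stream, player-specific ABR settings passed through unchanged, and a network trajectory given as (duration, bandwidth) steps. The class name, field names, and placeholder URL are hypothetical; the nested settings object is modeled on the dash.js v4 settings layout and should be checked against the player's documentation.

```python
import json
from dataclasses import asdict, dataclass, field

SUPPORTED_PLAYERS = {"dash.js", "hls.js", "ExoPlayer"}


@dataclass
class JobConfig:
    """Hypothetical description of one test run as submitted by a test coordinator."""
    player: str
    stream_url: str
    # Player-specific ABR settings, forwarded to the player unchanged.
    player_settings: dict = field(default_factory=dict)
    # Network trajectory as (duration in seconds, bandwidth in kbit/s) steps.
    trajectory: list = field(default_factory=list)

    def validate(self) -> None:
        if self.player not in SUPPORTED_PLAYERS:
            raise ValueError(f"unsupported player: {self.player}")
        if not self.stream_url.startswith(("http://", "https://")):
            raise ValueError("stream_url must be an HTTP(S) URL")
        if not self.trajectory:
            raise ValueError("every test run needs a network trajectory")
        for duration_s, kbps in self.trajectory:
            if duration_s <= 0 or kbps < 0:  # 0 kbit/s is allowed to model an outage
                raise ValueError(f"invalid trajectory step: {(duration_s, kbps)}")

    def to_json(self) -> str:
        """Validate the configuration and serialize it for the job queue."""
        self.validate()
        return json.dumps(asdict(self))


if __name__ == "__main__":
    config = JobConfig(
        player="dash.js",
        stream_url="https://example.com/stream.mpd",  # placeholder URL
        # Setting names modeled on dash.js v4; verify against the player's docs.
        player_settings={"streaming": {"abr": {"ABRStrategy": "abrBola"}}},
        trajectory=[(60, 5000), (30, 800), (60, 3000)],
    )
    print(config.to_json())
```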

In addition to the media player configuration, each test run requires a network trajectory. The network trajectory defines how the available bandwidth evolves over time, so that the media players can be challenged and tested under various network conditions. To simplify creating a network trajectory, we offer a graphical tool for editing and fine-tuning it.
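One simple way to represent such a trajectory is as a list of piecewise-constant steps. The sketch below assumes (duration in seconds, bandwidth in kbit/s) steps and answers "how much bandwidth is available at time t?"; the class name, the optional looping, and holding the last value after the end are illustrative choices, not the testbed's format.

```python
from bisect import bisect_right
from itertools import accumulate
from typing import Sequence, Tuple


class NetworkTrajectory:
    """Piecewise-constant bandwidth over time, built from (duration_s, kbps) steps."""

    def __init__(self, steps: Sequence[Tuple[float, float]], loop: bool = False) -> None:
        if not steps:
            raise ValueError("a trajectory needs at least one step")
        self.rates = [kbps for _, kbps in steps]
        # Cumulative end time of each step, e.g. [(20, a), (10, b)] -> [20, 30].
        self.ends = list(accumulate(duration for duration, _ in steps))
        self.loop = loop

    @property
    def duration_s(self) -> float:
        return self.ends[-1]

    def bandwidth_at(self, t: float) -> float:
        """Available bandwidth in kbit/s at time t (seconds since the test run started)."""
        if self.loop:
            t %= self.duration_s
        elif t >= self.duration_s:
            return self.rates[-1]  # hold the last value once the trajectory has ended
        # bisect_right assigns a step boundary to the step that starts there.
        return self.rates[bisect_right(self.ends, t)]


if __name__ == "__main__":
    traj = NetworkTrajectory([(20, 6000), (10, 1000), (20, 3000)])
    print(traj.bandwidth_at(25))  # 1000
```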

Step 2: Test Execution

Once a test run has been configured, it is added to a global job queue. Multiple worker processes wait for new jobs and process them; depending on the length of the queue, worker processes can be added or removed dynamically. For each test run, a worker requests the media manifests and segments via a dedicated limiter instance.
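The queue-and-workers pattern described above can be sketched as follows. This is an assumption-laden illustration: it uses threads as a stand-in for the worker processes, a `None` sentinel to retire a worker when scaling down, and a hypothetical `execute_test_run` placeholder where a real worker would launch the player and route its requests through a limiter.

```python
import math
import queue
import threading


def execute_test_run(job: dict) -> dict:
    """Hypothetical placeholder: launch the player, route requests through a limiter, collect metrics."""
    return {"id": job["id"], "status": "completed"}


class WorkerPool:
    """Workers take test runs from a shared FIFO job queue (threads stand in for processes)."""

    def __init__(self) -> None:
        self.jobs: queue.Queue = queue.Queue()
        self.results: list = []
        self._lock = threading.Lock()
        self._workers = 0

    def _work(self) -> None:
        while True:
            job = self.jobs.get()
            try:
                if job is None:  # sentinel: this worker exits
                    return
                outcome = execute_test_run(job)
                with self._lock:
                    self.results.append(outcome)
            finally:
                self.jobs.task_done()

    def scale_to(self, n: int) -> None:
        """Start or retire workers until n are running (or scheduled to stop)."""
        while self._workers < n:
            threading.Thread(target=self._work, daemon=True).start()
            self._workers += 1
        while self._workers > n:
            # FIFO order: a sentinel stops one worker after the jobs queued before it.
            self.jobs.put(None)
            self._workers -= 1

    def autoscale(self, jobs_per_worker: int = 2, max_workers: int = 8) -> None:
        """Size the pool from the queue length; qsize() is approximate but sufficient here."""
        wanted = math.ceil(self.jobs.qsize() / jobs_per_worker)
        self.scale_to(max(1, min(max_workers, wanted)))


if __name__ == "__main__":
    pool = WorkerPool()
    for i in range(5):
        pool.jobs.put({"id": i})
    pool.autoscale()   # 5 queued jobs / 2 per worker -> 3 workers
    pool.jobs.join()   # wait until every queued job has been processed
    print(sorted(r["id"] for r in pool.results))
    pool.scale_to(0)   # retire all workers
    pool.jobs.join()
```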

The limiter instance applies the selected network trajectory and thereby ensures that the bandwidth available to the media player follows the trajectory. It either fetches the required files from a shared folder or proxies requests to external manifests and media segments.
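To see how a trajectory translates into what the player experiences, the sketch below computes how long a limiter that follows the trajectory exactly would take to deliver a segment of a given size when the request starts at a given time. It assumes an idealized fluid transfer (no TCP slow start, latency, or protocol overhead) and (duration in seconds, bandwidth in kbit/s) steps; the function name is hypothetical and this is not the testbed's limiter implementation.

```python
import math
from typing import Sequence, Tuple


def transfer_time(steps: Sequence[Tuple[float, float]], start_s: float, size_bytes: int) -> float:
    """Seconds needed to deliver size_bytes starting at start_s under a piecewise trajectory.

    steps are (duration in seconds, bandwidth in kbit/s) pairs; the last bandwidth is held
    after the trajectory ends. Latency and protocol overhead are ignored.
    """
    if size_bytes <= 0:
        return 0.0
    if start_s < 0:
        raise ValueError("start_s must be non-negative")
    remaining_bits = size_bytes * 8
    t = start_s
    step_end = 0.0
    for index, (duration_s, kbps) in enumerate(steps):
        step_end += duration_s
        rate_bps = kbps * 1000
        if index == len(steps) - 1:
            # The final step lasts indefinitely; an outage here means the transfer never ends.
            return t - start_s + remaining_bits / rate_bps if rate_bps > 0 else math.inf
        if t >= step_end:
            continue  # this step lies entirely before the current time
        sendable = rate_bps * (step_end - t)  # bits deliverable before the step ends
        if remaining_bits <= sendable:
            return t - start_s + remaining_bits / rate_bps
        remaining_bits -= sendable
        t = step_end
    raise ValueError("trajectory has no steps")


if __name__ == "__main__":
    # A 1 MB segment requested 2 s before the bandwidth rises from 1 to 4 Mbit/s.
    print(transfer_time([(10, 1000), (10, 4000)], 8.0, 1_000_000))  # 3.5
```

Dividing the segment size by this duration gives the throughput sample a throughput-based ABR rule would measure, which is why trajectory changes in the middle of a download show up as intermediate throughput values.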

Step 3: Test Evaluation

While the tests are being executed, the relevant data must be stored so that we can analyze the results and understand how the configuration options influence the overall user experience. For that reason, we integrated our FAMIUM SAND streaming analytics solution into the FAMIUM ABR Testbed.

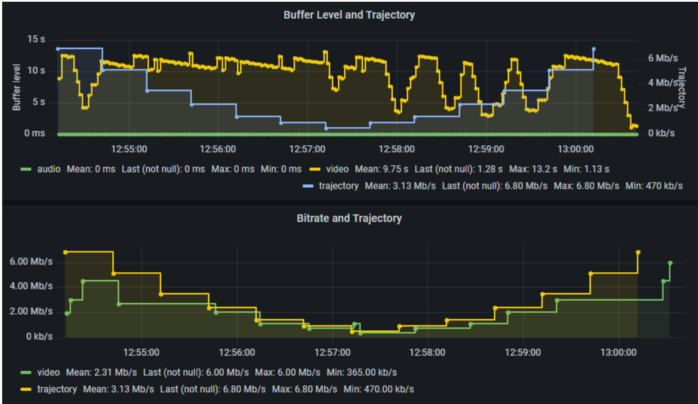
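Stored metrics make questions from the introduction, such as whether a player ever selects a given representation, a matter of simple aggregation. The sketch below assumes the selected quality has been exported as time-ordered (timestamp, bitrate) samples, a hypothetical format rather than the FAMIUM SAND data model, and that each reported quality stays active until the next sample.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def selection_report(samples: Sequence[Tuple[float, int]],
                     ladder_kbps: Sequence[int]) -> Tuple[Dict[int, float], List[int]]:
    """Share of playback time per bitrate, plus the bitrates that were never selected.

    samples are time-ordered (timestamp_s, selected_kbps) reports; each reported
    quality is assumed to stay active until the next report.
    """
    seconds: Counter = Counter()
    for (t0, kbps), (t1, _) in zip(samples, samples[1:]):
        seconds[kbps] += t1 - t0
    total = sum(seconds.values())
    share = {kbps: (seconds[kbps] / total if total else 0.0) for kbps in ladder_kbps}
    unused = [kbps for kbps in ladder_kbps if seconds[kbps] == 0]
    return share, unused


if __name__ == "__main__":
    samples = [(0, 1200), (10, 2500), (30, 5000), (40, 5000)]
    share, unused = selection_report(samples, [400, 1200, 2500, 5000])
    print(share)   # {400: 0.0, 1200: 0.25, 2500: 0.5, 5000: 0.25}
    print(unused)  # [400]
```

Running the same report for several players or configurations against the same trajectory shows whether a rendition is skipped by all of them or only by some.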

During playback, the media players report metrics such as the buffer level, the selected quality and many more to the SAND/CMCD metrics server. After a test run is completed, the results can be evaluated directly in a dashboard, without looking at any raw data. An example of the buffer level and the selected bitrate compared to the network trajectory can be found below:

Summary

ABR decision-making is an essential component of a media player. The FAMIUM ABR Testbed enables the evaluation of media players under arbitrary network conditions. It is not only a valuable tool for content providers to automatically test their streams against different media players under specific network conditions; it will also serve as an important tool for reworking the ABR logic in the upcoming version 5.0.0 of dash.js.

If you have any questions regarding our ABR and DASH activities or dash.js in particular, feel free to check out our website and contact us.