Affective computing, also known as Emotion AI, is an area of artificial intelligence that focuses on developing systems and technologies capable of recognizing, interpreting, and responding to human emotions (read more in our Affective Computing 101). In this overview article, we take a step back and look at emotions as the field’s core subject: What exactly are emotions, why do we have them, and is it possible to measure them? (Spoiler: Yes, of course it is — and even intelligent emotion analysis is possible.)

Emotion is …

Do you remember the “Love is …” comic strips? Each strip contains the caption “Love is …” and a thought completing it. Now try doing that with emotion. Depending on whom you ask, you will learn about different aspects of what emotions are, but, as surprising as it sounds, even scientists struggle to find a universal, “one size fits all” definition of emotion.

Everyone knows what an emotion is, until asked to give a definition. Then, it seems, no one knows.

Fehr, Beverly & Russell, James A. (1984). Concept of emotion viewed from a prototype perspective. Journal of Experimental Psychology: General, 113(3), 464–486.

Emotions are a complex phenomenon involving psychological, physiological, behavioral, cognitive, social, and cultural aspects. That’s why it’s so challenging to find a definition that works for all scientific fields and research interests. At this point, however, we don’t want to take a deep dive into the brain and the neuroscience of which brain regions are associated with individual emotions. Our main interest is how emotions manifest and how we (humans and machines) register them.

Mixed emotions

Emotions are, for the most part, tied to specific objects or events. They can be triggered by social interactions, external events and stimuli, personal experiences and memories, or hormonal changes. When perceiving somebody’s emotional reactions, we intuitively recognize and interpret them in light of the specific situation, the person’s character, and their cultural background.

In general, emotions surface through

- physiological reactions (e.g., an increase in heart rate, sweating, or blushing and paling due to the dilation or constriction of blood vessels, respectively),

- behavior (changes in facial expressions, gestures, posture, or voice pitch),

- and a subjective, more or less conscious component: “feelings”.

When we perceive somebody else’s emotions, this happens both consciously and unconsciously based on various cues: the physiological reactions and the behavior of our counterpart mentioned above. In scientific settings, we can measure emotions by analyzing these cues. This, however, does not mean that a machine can “read” human subjects, as some dystopian scenarios might suggest. Instead, emotion analysis is the result of pattern recognition techniques: We identify and classify the physiological and behavioral changes associated with different emotions and train algorithms on this data. In the next step, the trained algorithms can recognize similar patterns in new data and classify emotions with a certain degree of accuracy.
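To make the “train, then recognize” idea concrete, here is a minimal sketch of one very simple pattern recognition technique, a nearest-centroid classifier. It is not the method used by any particular product. The two features (heart rate and skin conductance), the labels, and all numbers are hypothetical and chosen only for illustration; since these signals mainly reflect arousal, the labels are arousal levels rather than discrete emotions.

```python
from math import dist
from statistics import fmean

# Hypothetical training data: (heart rate in bpm, skin conductance in µS) -> label.
# The values are made up for illustration, not measured.
TRAINING = [
    ((62.0, 2.1), "low arousal"),
    ((65.0, 2.4), "low arousal"),
    ((95.0, 7.8), "high arousal"),
    ((101.0, 8.5), "high arousal"),
]

def train_centroids(samples):
    """Training step: average the feature vectors of each label into a centroid."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }

def classify(centroids, features):
    """Recognition step: pick the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(TRAINING)
print(classify(centroids, (98.0, 8.0)))  # high arousal
```

Real systems would normalize the features (here heart rate dominates the distance simply because of its larger numeric range) and use far more data and more capable models, but the two-step structure stays the same.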

Our aim, therefore, is to collect reliable and robust emotional data, to recognize and analyze emotions as holistically as possible, and to interpret the data objectively and intelligently, for example via multimodal data acquisition and AI-based analysis. While physiological and behavioral parameters can be measured in scientifically sound settings, subjective feelings can only be accessed through questioning (e.g., interviews or questionnaires) or self-reports.

Emotion classification

Experiencing emotions is subjective and deeply personal by nature. So how is it possible to measure the unique feeling and expression of happiness? How do you quantify sadness? When we attempt to measure emotions, we need to apply an objective classification system, which inevitably reduces the complexity of the phenomenon.

Measure what is measurable and make measurable what is not.

(ascribed to Galileo Galilei)

A crucial step in making things measurable is to define a methodology for the measuring process: Which aspects can be measured? How do we quantify and qualify them? In the case of emotions, this means agreeing on which aspects can be measured (i.e., physiological and behavioral reactions) and defining criteria and labels to categorize them.

Boiling down the research discussion quite a bit, we can differentiate two approaches to classify emotions and to describe their structure:

- Emotions are discrete categories.

- Emotions can be described in terms of dimensional models.

Discrete emotion theory distinguishes a small number of basic emotions, for example (according to American psychologist Paul Ekman’s theory) happy, angry, sad, surprised, frightened, and disgusted. In reality, however, humans use and interpret far more than these six basic emotions, a fact that compound emotion categories take into account. Compound emotions are formed by combining basic emotions, for example a happily surprised vs. an angrily surprised look on your face. In this approach, happily surprised and angrily surprised constitute two distinct categories (out of 21 emotion categories defined in total).

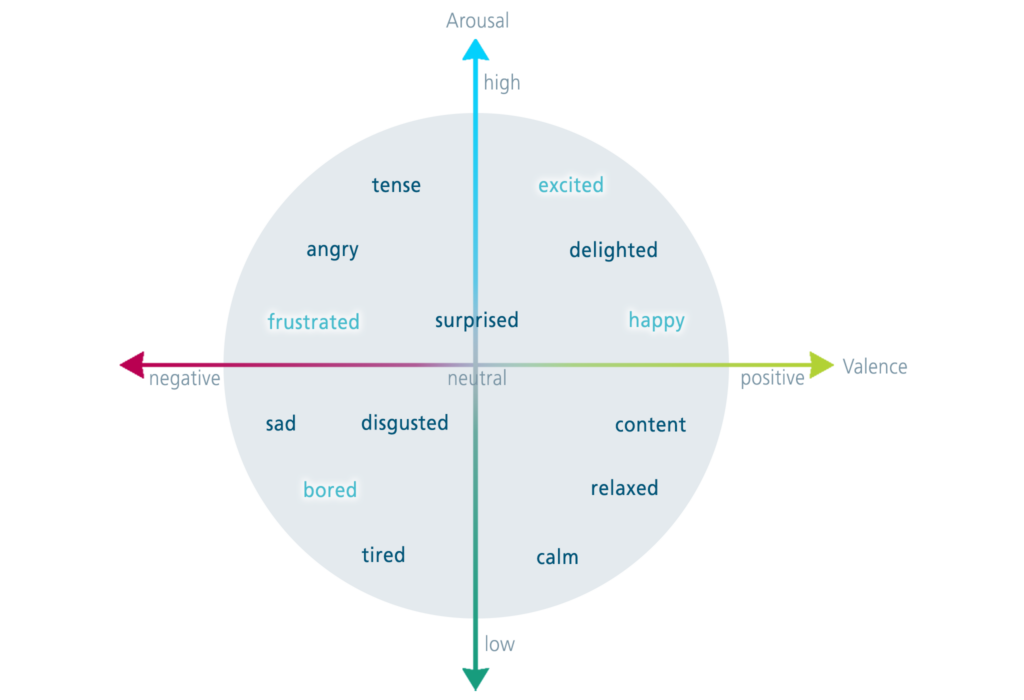
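The distinction between basic and compound categories can be expressed as a small data structure. The sketch below is illustrative only: it models a compound category as an ordered pair of two different basic emotions (the first one acting as the modifier) and derives a readable label. The names `Basic`, `Compound`, and the adverb table are hypothetical, and the sketch does not reproduce the full published set of categories.

```python
from dataclasses import dataclass
from enum import Enum

class Basic(Enum):
    """Ekman's six basic emotions as discrete categories."""
    HAPPY = "happy"
    ANGRY = "angry"
    SAD = "sad"
    SURPRISED = "surprised"
    FRIGHTENED = "frightened"
    DISGUSTED = "disgusted"

# Hypothetical naming helper: adverb form used when a basic emotion modifies another.
ADVERB = {
    Basic.HAPPY: "happily",
    Basic.ANGRY: "angrily",
    Basic.SAD: "sadly",
    Basic.SURPRISED: "surprisedly",
    Basic.FRIGHTENED: "fearfully",
    Basic.DISGUSTED: "disgustedly",
}

@dataclass(frozen=True)
class Compound:
    """A compound category built from two different basic emotions."""
    modifier: Basic
    base: Basic

    def __post_init__(self):
        if self.modifier == self.base:
            raise ValueError("a compound needs two different basic emotions")

    @property
    def label(self) -> str:
        return f"{ADVERB[self.modifier]} {self.base.value}"

happily_surprised = Compound(Basic.HAPPY, Basic.SURPRISED)
angrily_surprised = Compound(Basic.ANGRY, Basic.SURPRISED)
print(happily_surprised.label)                 # happily surprised
print(happily_surprised != angrily_surprised)  # True: two distinct categories
```

Because the dataclass is frozen, compound categories are hashable and can serve directly as class labels, e.g., as dictionary keys when counting annotations.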

When categorizing emotions with a two-dimensional model, we label whether the predominant emotion is more positive or negative (valence dimension) and whether the level of activation is high or low (arousal dimension). Both dimensions can be combined in a circumplex model of emotion, with valence and arousal as the x and y axes, so that each emotional state is positioned as a point in this two-dimensional space.
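A point in the valence-arousal plane can also be described in polar terms: its angle indicates the kind of emotional state, and its distance from the neutral origin indicates how pronounced it is. The following sketch assumes that both dimensions are already scaled to the range [-1, 1]; the function name and the quadrant wording are hypothetical.

```python
import math

def circumplex_position(valence: float, arousal: float):
    """Return (angle in degrees, distance from origin, quadrant description)
    for a point in the valence-arousal plane; inputs are assumed to lie in [-1, 1]."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    # 0 degrees = purely positive valence, 90 degrees = purely high arousal.
    angle = math.degrees(math.atan2(arousal, valence)) % 360
    distance = math.hypot(valence, arousal)  # 0 at the neutral origin
    quadrant = (
        ("positive" if valence >= 0 else "negative")
        + " valence, "
        + ("high" if arousal >= 0 else "low")
        + " arousal"
    )
    return angle, distance, quadrant

angle, distance, quadrant = circumplex_position(0.6, 0.6)
print(quadrant)  # positive valence, high arousal
```

An excited state, for instance, would fall into the positive-valence, high-arousal quadrant, whereas a relaxed state would sit at positive valence and low arousal.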

Making emotions measurable

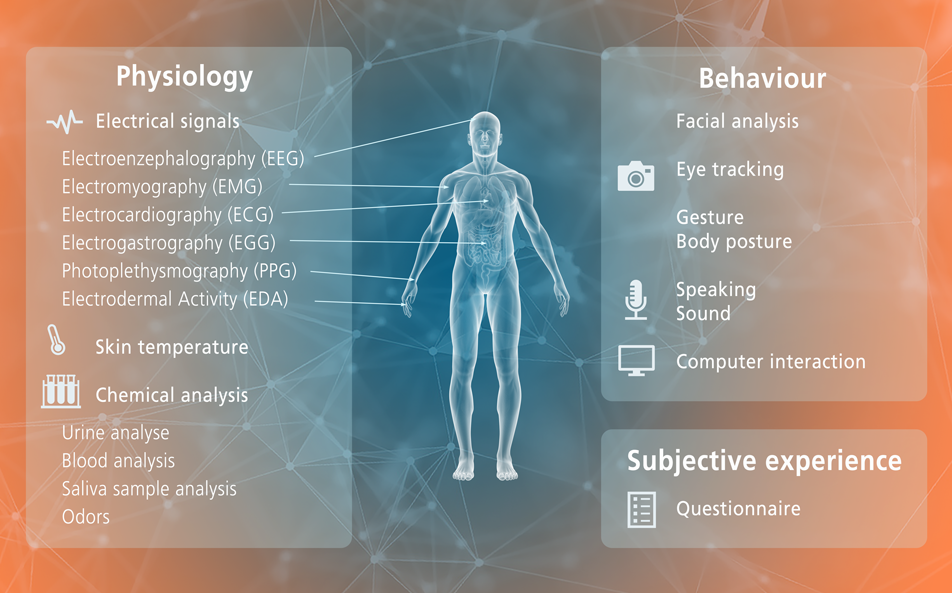

Emotions manifest in recognizable and stereotyped behavioral patterns, which is a prerequisite for standardized data acquisition and analysis methods. Given the complex nature of emotions, we depend on multimodal data acquisition and measuring tools to capture the individual components:

- measuring physiological components through electrical signals (e.g., EEG or ECG), skin temperature, or chemical analysis (of urine, blood, saliva, or odor),

- measuring behavioral components through facial analysis, eye tracking, or the analysis of gestures, body posture, and how we sound,

- measuring subjective components (“feelings”) through questionnaires.

Emotion measurement methods are based on either the categorical or the dimensional approach described above. Complex psycho-physiological affective states, for example, are assessed by classifying valence and arousal, i.e., through a dimensional approach. The labeling of discrete emotions, on the other hand, is based on the categorical approach.
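When several modalities each deliver an estimate on the same dimension, the estimates have to be combined. One common option is late fusion, sketched below as a confidence-weighted average of per-modality scores. This is an assumption made for illustration, not a description of how any specific system fuses its data; the modality names, scores, and confidences are hypothetical.

```python
def fuse_late(estimates: dict[str, tuple[float, float]]) -> float:
    """Late fusion: confidence-weighted average of per-modality arousal scores.

    `estimates` maps a modality name to (score in [-1, 1], confidence in [0, 1]).
    """
    total = sum(confidence for _, confidence in estimates.values())
    if total == 0:
        raise ValueError("at least one modality needs a non-zero confidence")
    return sum(score * confidence for score, confidence in estimates.values()) / total

# Hypothetical per-modality outputs for one time window.
readings = {
    "face": (0.5, 0.8),   # camera-based facial analysis
    "voice": (0.2, 0.4),  # analysis of how the person sounds
    "ecg": (-0.1, 0.2),   # heart-rate-based estimate
}
print(round(fuse_late(readings), 3))  # 0.329
```

Weighting by confidence lets a modality with poor signal quality (e.g., a face partly out of view) contribute less without being discarded entirely.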

One way to measure behavioral signals is to analyze and categorize facial expressions using the Facial Action Coding System (FACS). FACS involves identifying and coding specific facial muscle movements, known as action units (AUs), which correspond to different facial expressions (see AU examples in the original FACS edition by Ekman & Friesen, 1978). The AUs recorded during a predefined time period are then compared to the patterns that are typical for individual emotions. While FACS provides a standardized and objective way to analyze facial expressions, it does not “reveal” the test subject’s emotions. It is a framework for recognizing and categorizing facial movements and can thus serve, for example, as the starting point for pattern recognition algorithms in facial analysis and emotion recognition software.
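The comparison step described above can be sketched as follows: the AUs coded in the frames of a time window are pooled and matched against prototype AU combinations. The prototypes below are commonly cited, simplified combinations (intensity codes omitted), and published mappings differ in detail. Using Jaccard similarity as the matching score is an assumption of this sketch, and, in line with the caveat above, a match only describes facial movements; it says nothing certain about what the person actually feels.

```python
# Commonly cited, simplified prototype AU combinations (illustrative only).
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

def jaccard(a: set[int], b: set[int]) -> float:
    """Overlap between two AU sets: size of intersection / size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def match_prototype(observed_frames: list[set[int]]) -> tuple[str, float]:
    """Pool the AUs coded within a time window and return the best-matching prototype."""
    observed = set().union(*observed_frames)
    best = max(PROTOTYPES, key=lambda name: jaccard(observed, PROTOTYPES[name]))
    score = jaccard(observed, PROTOTYPES[best])
    return (best, score) if score > 0 else ("no match", 0.0)

window = [{6}, {6, 12}, {6, 12, 25}]  # AUs coded in three consecutive frames
print(match_prototype(window))        # ('happiness', 0.6666666666666666)
```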

What a feeling: Why we have emotions — and what they can be good for

Emotions are not just a vital part of the human experience; they also serve important functions: They enhance our attention, affect our decision-making, and motivate us, and we remember emotional events and items better than non-emotional ones (ask any mnemonist).

What is intriguing about emotions is that they largely escape our intentions (try not being disappointed by this year’s birthday present, for example, or at least try not to show it). We often even mispredict how we would feel (or act) in a hypothetical situation, a phenomenon dubbed “failure of affective forecasting”.

It’s the unconscious, almost uncontrollable aspect of emotions that makes emotion analysis interesting for various real-world applications. Market research, for example, mostly relies on asking test subjects about their post-hoc, rational motivation for choosing or not choosing a product. While this helps businesses understand how their target audience makes informed decisions, it reveals only to a limited extent how people feel about the product, service, or user experience. If you’ve ever participated in a user feedback analysis, you know how hard it is to access your sentiments and emotions in retrospect. By integrating facial analysis software capable of emotion analysis, market research tools can also provide live, direct, and unadulterated insights into users’ emotional reactions.

Real-time emotion analysis is especially valuable in usability tests. Traditionally, EEG caps have been used to measure valence and arousal levels during user testing, for instance in usability studies of gaming software. This requires participants to wear an EEG cap, which can be error-prone and time-consuming for them if they are doing the testing at home. State-of-the-art facial detection and emotion analysis software like SHORE® cannot match the accuracy and precision of an EEG cap when it comes to measuring cognitive processes. However, measuring valence (and potentially arousal) purely through contact-free, easy-to-apply camera-based methods suffices in certain application scenarios and lowers the barrier to entry for affective technology.

Another application area for emotion analysis is health and well-being: FACS is a proven method for monitoring and analyzing patients who are unable to communicate pain episodes verbally, for example due to cognitive impairments. Another setting where real-time emotion analysis can be beneficial is driver monitoring. It can help assess the driver’s well-being and comfort and detect signs of discomfort, stress, or cognitive overload before accidents happen.

In these use cases, the application of facial and emotion analysis has the potential to dispel some of the sci-fi feel that surrounds these technologies. Instead, they emerge as valuable tools that contribute insightful data and enhance applications in which social and emotional intelligence are an important factor.
